Keras LearningRateScheduler Callback – Making Changes On The Fly

This post is the last in my four-part series on Keras callbacks. A callback is an object that can perform actions at various stages of training, for example at the start or end of each epoch. You pass it to model.fit() through the callbacks argument. In today's post, I give a very brief overview of the LearningRateScheduler callback.

So, what is a learning rate anyway? In the context of neural networks, the learning rate is a hyperparameter that controls how much the weights change at each update. Backpropagation computes the gradient of the loss with respect to each weight. In plain gradient descent, each weight then moves against its gradient by a step scaled by the learning rate. For more details and the math involved, check out Matt Mazur's excellent discussion. Adjusting the learning rate can play a huge role in helping the network converge and lowering the loss value. If the learning rate is too high, the weights can fluctuate significantly between updates, and the model may converge too quickly to a suboptimal solution or fail to converge at all. If the learning rate is too low, the weights change very little with each update, so training is slow and can get stuck. In either case, your model will suffer. For a more detailed explanation of the impact of learning rate on neural network performance, check out Jason Brownlee's great post.
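To make the idea concrete, here is a minimal sketch in plain Python (no Keras) of gradient descent on a toy one-weight loss, f(w) = w², whose gradient is 2w. The function name `gradient_descent`, the starting weight, and the three learning rates are illustrative choices, not anything from Keras. Each update subtracts the learning rate times the gradient, so the learning rate directly scales how far the weight moves.

```python
def gradient_descent(lr, steps=20, w=5.0):
    """Minimize the toy loss f(w) = w**2 with plain gradient descent.

    Returns the list of weights visited, starting with the initial weight.
    """
    history = [w]
    for _ in range(steps):
        grad = 2 * w        # derivative of the toy loss w**2
        w = w - lr * grad   # the learning rate scales the size of each step
        history.append(w)
    return history


# Compare a small, a moderate, and a too-large learning rate.
for lr in (0.01, 0.1, 1.1):
    path = gradient_descent(lr)
    print(f"lr={lr}: first steps {[round(v, 2) for v in path[:4]]}, final w = {path[-1]:.4f}")
```

With these values, lr=0.01 is still far from the minimum at w = 0 after 20 steps, lr=0.1 gets close, and lr=1.1 overshoots so badly that the weight flips sign on every step and grows instead of shrinking.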

One powerful technique for training an optimal model is to adjust the learning rate as training progresses: start with a somewhat high learning rate, then reduce it over time. Think of sanding wood. You start with coarse grit sandpaper to do some initial smoothing. You then switch to finer and finer grit sandpaper until you have a very smooth surface. If you used only coarse grit sandpaper, you would get a sanded surface, but it would not be smooth. If you used only very fine grit sandpaper, you might eventually get a smooth surface, but it would take forever!
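One simple way to express "start coarse, then go finer" is exponential decay. The sketch below assumes a starting rate of 0.1 and a decay constant of 0.05 per epoch; both numbers, and the name `exponential_decay`, are hypothetical choices for illustration. The `(epoch, lr)` signature matches what Keras passes to a LearningRateScheduler schedule, so a function like this could be handed to that callback.

```python
import math


def exponential_decay(epoch, lr, initial_lr=0.1, k=0.05):
    """Coarse-to-fine schedule: lr(epoch) = initial_lr * exp(-k * epoch).

    Hypothetical constants. The `lr` argument is ignored because the rate
    is computed directly from the epoch number.
    """
    return initial_lr * math.exp(-k * epoch)


epochs = list(range(0, 60, 10))
rates = [exponential_decay(e, None) for e in epochs]
for epoch, rate in zip(epochs, rates):
    print(f"epoch {epoch:2d}: lr = {rate:.5f}")
```

Computing the rate from the epoch number, rather than repeatedly shrinking the previous value, keeps the schedule predictable even if training is stopped and resumed.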

Keras provides a nice callback called LearningRateScheduler that takes care of the learning rate adjustments for you. You define a schedule function, and Keras does the rest: at the beginning of every epoch, the callback calls your function with the epoch index (and the current learning rate) and sets the optimizer's learning rate to whatever value the function returns. A common choice is step decay. For example, at epoch 100 the learning rate is multiplied by 0.1, at epoch 200 it is multiplied by 0.1 again, and so on. That's all there is to it. You can easily change the schedule by editing the function you pass to the callback. You could even run a grid search over several schedules to find the best model. As with most features of Keras, it is easy and straightforward.
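Here is a minimal sketch of that step-decay example, assuming TensorFlow 2.x with `tf.keras`. The starting rate of 0.01 and the names `step_decay`, `INITIAL_LR`, `DROP`, and `EPOCHS_PER_DROP` are assumptions for illustration. The tiny model and random data exist only to show where the callback plugs into `model.fit()`. Keras passes a 0-based epoch index, so "epoch 100" here is the 101st epoch of training.

```python
import numpy as np
import tensorflow as tf

INITIAL_LR = 0.01      # assumed starting learning rate
DROP = 0.1             # multiply the learning rate by this factor...
EPOCHS_PER_DROP = 100  # ...once every 100 epochs


def step_decay(epoch, lr):
    """Return the learning rate for a 0-based epoch index.

    Epochs 0-99 use 0.01, epochs 100-199 use 0.001, and so on.
    The current `lr` is ignored; the rate is recomputed from the epoch.
    """
    return INITIAL_LR * DROP ** (epoch // EPOCHS_PER_DROP)


# The callback calls step_decay at the start of every epoch.
lr_callback = tf.keras.callbacks.LearningRateScheduler(step_decay, verbose=0)

# Tiny model and random data, used only to show the wiring.
rng = np.random.default_rng(0)
x = rng.random((64, 4)).astype("float32")
y = rng.random((64, 1)).astype("float32")

model = tf.keras.Sequential([tf.keras.Input(shape=(4,)), tf.keras.layers.Dense(1)])
model.compile(optimizer=tf.keras.optimizers.SGD(learning_rate=INITIAL_LR), loss="mse")
model.fit(x, y, epochs=3, callbacks=[lr_callback], verbose=0)
```

Because `step_decay` recomputes the rate from the epoch number instead of multiplying the current rate, resuming training partway through (for example with `initial_epoch`) still yields the intended schedule.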

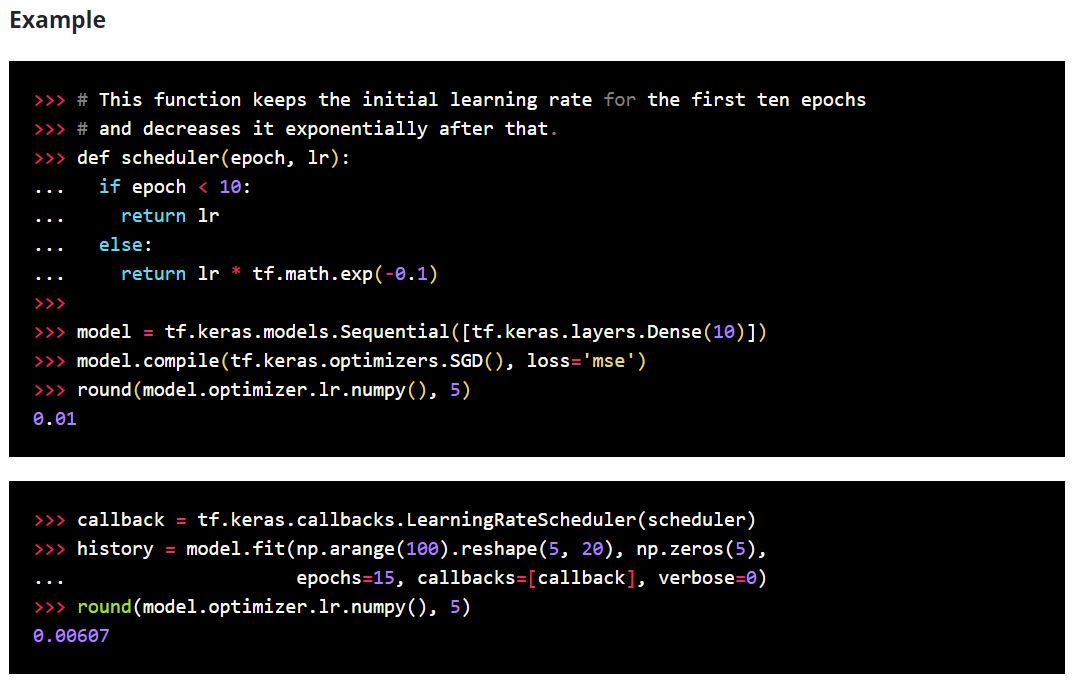

The syntax for the callback, along with some example code, is available on the keras.io website.

I hope you have enjoyed this series on Keras Callbacks. Be sure to check out all 4 parts.

Part 1 - Keras EarlyStopping Callback

Part 2 - Keras ModelCheckpoint Callback

Part 3 - Keras Tensorboard Callback

#artificialintelligence #ai #machinelearning #ml #tensorflow #keras #neuralnetworks #deeplearning #learningrate #hyperparameters #callbacks #learningratescheduler