Helm 101: Installing PostgreSQL in Kubernetes

Kubernetes has changed how modern workloads are built, deployed, and run in cloud and on-premises environments. Until fairly recently, however, there was no particularly elegant way to deploy applications onto it, and a deployment often involved many manual steps.

Today, many tools exist to streamline deploying applications onto Kubernetes clusters. One of them, likely the most popular and widely adopted, is Helm. Helm acts as a package manager for Kubernetes: applications are packaged as charts, which makes them simple to deploy and manage in a cluster.

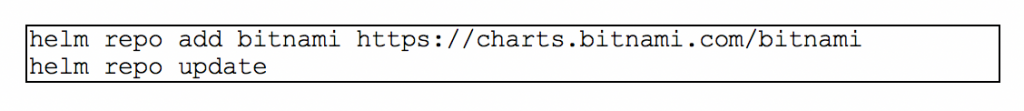

To install an application using Helm, the repository that hosts its chart must first be added. The following example installs PostgreSQL.

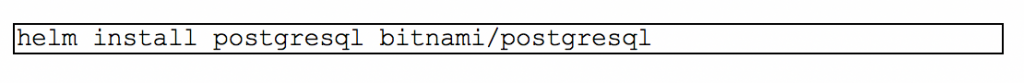
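
In text form, this step might look like the following. The repository alias `bitnami` and its URL are assumptions made for illustration (Bitnami publishes a widely used PostgreSQL chart); substitute whichever repository hosts the chart you want.

```shell
# Register the chart repository under a local alias (assumed here: Bitnami).
helm repo add bitnami https://charts.bitnami.com/bitnami

# Refresh the local index so the latest chart versions are visible.
helm repo update
```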

Once the repository is added, the application can be installed by running the following command:

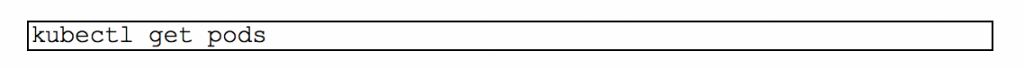
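
As a sketch of the install step, assuming the repository was registered under the alias `bitnami` and using `my-postgresql` as a hypothetical release name:

```shell
# Install the chart as a named release in the current namespace.
# "my-postgresql" is an illustrative release name; "bitnami/postgresql"
# assumes the chart lives in a repository added under the alias "bitnami".
helm install my-postgresql bitnami/postgresql
```

The release name is what later commands such as `helm upgrade` and `helm uninstall` refer to, so it is worth picking something recognizable.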

Helm will then confirm that the chart has been successfully deployed onto the Kubernetes cluster, and after a few seconds the application should be ready. The readiness of the application can be confirmed by listing the pods currently running in the cluster with the following command:

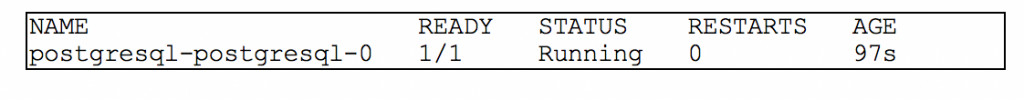
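
A minimal version of this check, assuming `kubectl` points at the same cluster and the release was installed into the current namespace:

```shell
# List the pods in the current namespace with their status and readiness.
kubectl get pods

# Optionally, keep watching until the pod settles (stop with Ctrl+C):
# kubectl get pods --watch
```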

Once the application is ready, its pods should show up as Running, and all of its containers should show up as Ready.

The vast majority of Helm charts follow this same procedure. The main exceptions are charts that install significantly larger applications, for which it may be necessary to override the chart's default values so that the application is configured for its environment and requirements. These charts are generally well documented, however, so even though writing the overrides may take a few extra minutes, the deployment itself should still be quick.
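
To illustrate what such an override can look like, here is a minimal sketch that writes a small values file. The file name, user name, and database name are hypothetical, and the keys (`auth.username`, `auth.database`, `primary.persistence.size`) are assumptions based on the layout of the Bitnami PostgreSQL chart; check them against the output of `helm show values` for the chart and version you actually use.

```shell
# Write a small overrides file; any key not listed keeps the chart default.
# Key names are assumed from the Bitnami PostgreSQL chart and may differ
# in other charts or chart versions.
cat > postgres-values.yaml <<'EOF'
auth:
  username: app_user   # hypothetical application user
  database: app_db     # hypothetical database created on first start
primary:
  persistence:
    size: 8Gi          # illustrative volume size
EOF
```

The file is then passed at install time with the `-f` flag, for example `helm install my-postgresql bitnami/postgresql -f postgres-values.yaml`. Keeping overrides in a file rather than in `--set` flags makes them easy to review and to keep under version control.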

Deploying all applications in a Kubernetes cluster via Helm, as in the example above, keeps the deployment, management, and maintenance of every workload in the cluster simple. This, in turn, allows for quicker iteration in development environments and for greater resilience and stability in production.