Cluster Autoscaler: 80 seconds could save you 80% or more on your cloud costs!

If you’re scaling up in the cloud or running at scale in the cloud, you’ve probably come to the same conclusion many have: it’s much easier to get started and to ramp up, but it can be very expensive when running in Production at scale. The cost can be especially daunting if you’re using accelerated compute options, like GPUs for AI/Machine Learning, which can be extremely expensive: 3x or 4x or even more at scale when compared to a non-cloud scenario.

When using GPUs or accelerators for AI/ML/DL tasks and using Kubernetes as the container orchestration strategy (a common combination), we’ve found that implementing the Cluster Autoscaler capability for GPU nodes can save 80% or more in GPU costs (and often 95%+) when running AI/ML/DL training jobs on Azure and AWS.

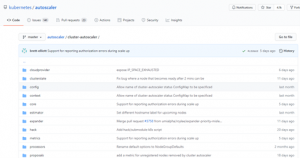

https://github.com/kubernetes/autoscaler/tree/master/cluster-autoscaler

What is Cluster Autoscaler? Simply put, it’s a tool that automatically adjusts the size of a Kubernetes cluster (i.e. compute services that you get billed for) when: 1) you need more nodes because pods can’t be scheduled due to insufficient resources, and 2) you have nodes that have been underutilized for a specified period of time and can be spun down.

In this case, Cluster Autoscaler can spin up a net new GPU node when you need to run a training job and quickly spin down the GPU node after your training job is finished. This spin down feature is called “scale-down” and has a default setting of 10 minutes before the unused node is terminated (this setting is configurable via the `--scale-down-unneeded-time` flag based on how your app runs; the separate `--scan-interval` flag controls how often the cluster is re-evaluated).
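To make the scale-down timing a bit more concrete, here is a minimal Python sketch of the idea. It is a toy model, not Cluster Autoscaler’s actual implementation: the function name `first_removal_tick`, the list-of-booleans input, and the 10-second check period are all assumptions we made up for illustration. The only thing taken from the text above is the 10-minute window a node has to sit unused before it is removed.

```python
def first_removal_tick(idle_by_tick, tick_seconds=10, unneeded_seconds=600):
    """Return the index of the periodic check at which a node would be removed.

    idle_by_tick: one boolean per periodic check, True when the node is unneeded.
    tick_seconds: assumed time between checks (hypothetical value for this sketch).
    unneeded_seconds: how long the node must stay unneeded (10 minutes by default).
    Returns None if the node never stays idle long enough.
    """
    idle_since = None
    for tick, idle in enumerate(idle_by_tick):
        if not idle:
            # Any use of the node resets the idle timer.
            idle_since = None
            continue
        if idle_since is None:
            idle_since = tick
        if (tick - idle_since) * tick_seconds >= unneeded_seconds:
            return tick
    return None


# A training job keeps the node busy for 3 checks, then the node sits idle.
removal = first_removal_tick([False] * 3 + [True] * 100)
print(removal)
```

The point of the sketch is that the timer restarts whenever the node picks up work again, so a node that is busy on and off never gets removed, while one that goes quiet after a training job is gone roughly 10 minutes later.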

We ran some side-by-side comparisons (Cluster Autoscaler vs. no Cluster Autoscaler) with an app built on Kubernetes that dynamically runs anywhere between 1 and 10 training jobs in parallel, for anywhere between 10 minutes and 2 hours each, and found that we saved 90%+ in GPU compute node costs simply by implementing Cluster Autoscaler as part of our architecture and strategy.
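If you want to sanity-check this kind of saving for your own workload, the arithmetic is easy to sketch in Python. The numbers below are hypothetical (10 always-on GPU nodes over 24 hours vs. ten 60-minute jobs), not our measured results, and the model makes simplifying assumptions: one node per job, billing proportional to node uptime, a 10-minute scale-down wait after each job, and node start-up time ignored.

```python
def autoscaled_node_hours(job_minutes, scale_down_minutes=10.0):
    """GPU node-hours billed when each job gets a node that is removed
    scale_down_minutes after the job finishes (start-up time ignored)."""
    return sum(m + scale_down_minutes for m in job_minutes) / 60.0


def always_on_node_hours(node_count, hours):
    """GPU node-hours billed when node_count nodes run for the whole period."""
    return node_count * hours


def savings_fraction(job_minutes, node_count, hours, scale_down_minutes=10.0):
    """Fraction of always-on GPU node-hours avoided by autoscaling."""
    baseline = always_on_node_hours(node_count, hours)
    scaled = autoscaled_node_hours(job_minutes, scale_down_minutes)
    return 1.0 - scaled / baseline


# Hypothetical day: ten 60-minute training jobs vs. 10 GPU nodes left running.
example = savings_fraction([60] * 10, node_count=10, hours=24)
print(f"{example:.1%}")
```

Under these made-up inputs the autoscaled cluster bills about 11.7 node-hours against 240 for the always-on one. The busier your GPU nodes are, the smaller the gap gets, so plug in your own job counts and durations before expecting a particular number.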

Since the app we’re using is specific to our use case and needs to be hybrid, multi-cloud, and portable (i.e. able to run anywhere with Kubernetes, with no lock-in to proprietary services of the various cloud providers), this was and will be a game changer for us and the clients we advise and support around their AI/ML and Kubernetes strategies.

More to come on Cluster Autoscaler in a future blog. If you need assistance or guidance on implementing this for your K8S app in the cloud or in a hybrid scenario, let us know. As always, we’ve put in extensive time and effort proving this out so you don’t have to!